I am currently a postdoctoral researcher at the Thrust of Intelligent Transportation (Systems Hub), The Hong Kong University of Science and Technology (Guangzhou). I received my Ph.D. from the National Key Laboratory of Human-Machine Hybrid Augmented Intelligence, Xi’an Jiaotong University. During my Ph.D., I was also a visiting student at the College of Computing and Data Science, Nanyang Technological University, under the supervision of Prof. Shijian Lu.

My research interests lie in spatial perception and understanding. I aim to develop advanced techniques that enable autonomous agents to perceive and understand real-world environments effectively, especially in dynamic and open-world settings.

🔥 News

- 2025.07: 🎉🎉 One paper accepted to ICCV 2025!

- 2024.12: 🎉🎉 One paper accepted to T-PAMI!

📝 Publications

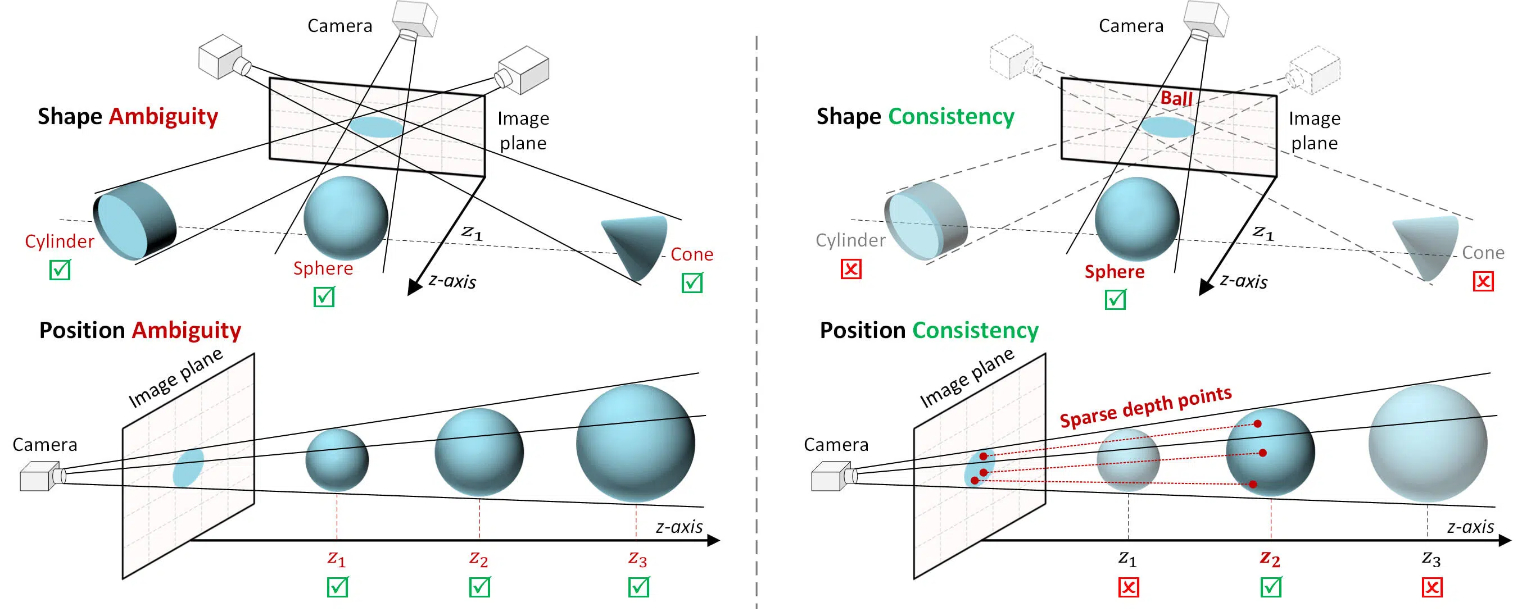

PacGDC: Label-Efficient Generalizable Depth Completion with Projection Ambiguity and Consistency

H. Wang, A. Xiao, X. Zhang, M. Yang, and S. Lu

PacGDC is a label-efficient technique that enhances data diversity with minimal annotation effort for generalizable depth completion. It builds on novel insights into the inherent ambiguities and consistencies in object shapes and positions during 2D-to-3D projection, which allow numerous pseudo geometries to be synthesized for the same visual scene. These insights contribute to the model’s strong generalizability across diverse scene semantics and scales, and across depth sparsity levels and patterns, in both zero-shot and few-shot settings. Project Page

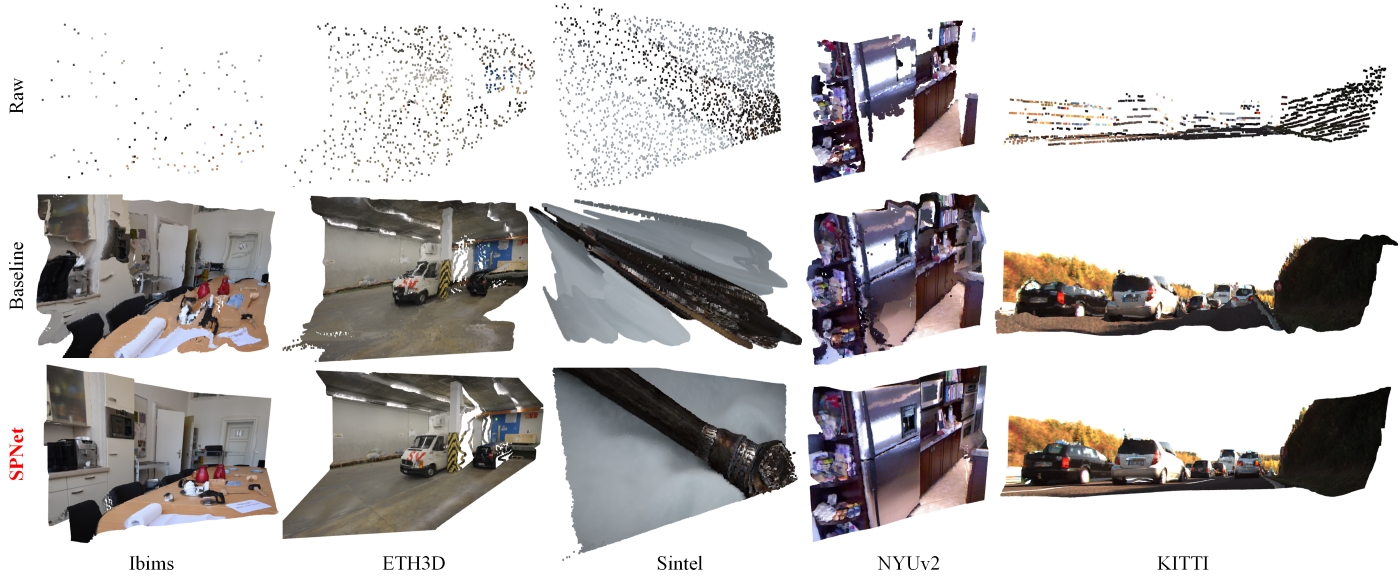

Scale Propagation Network for Generalizable Depth Completion

H. Wang, M. Yang, X. Zheng, and G. Hua

SPNet is the first work to carefully analyze the significance of the scale propagation property in generalizable depth completion. To satisfy this property, we introduce SP-Norm, a novel normalization layer that enhances generalizability and ensures efficient convergence. Coupled with our insights into ConvNeXt V2, SPNet achieves impressive generalization across a wide range of unseen scenarios. Project Page

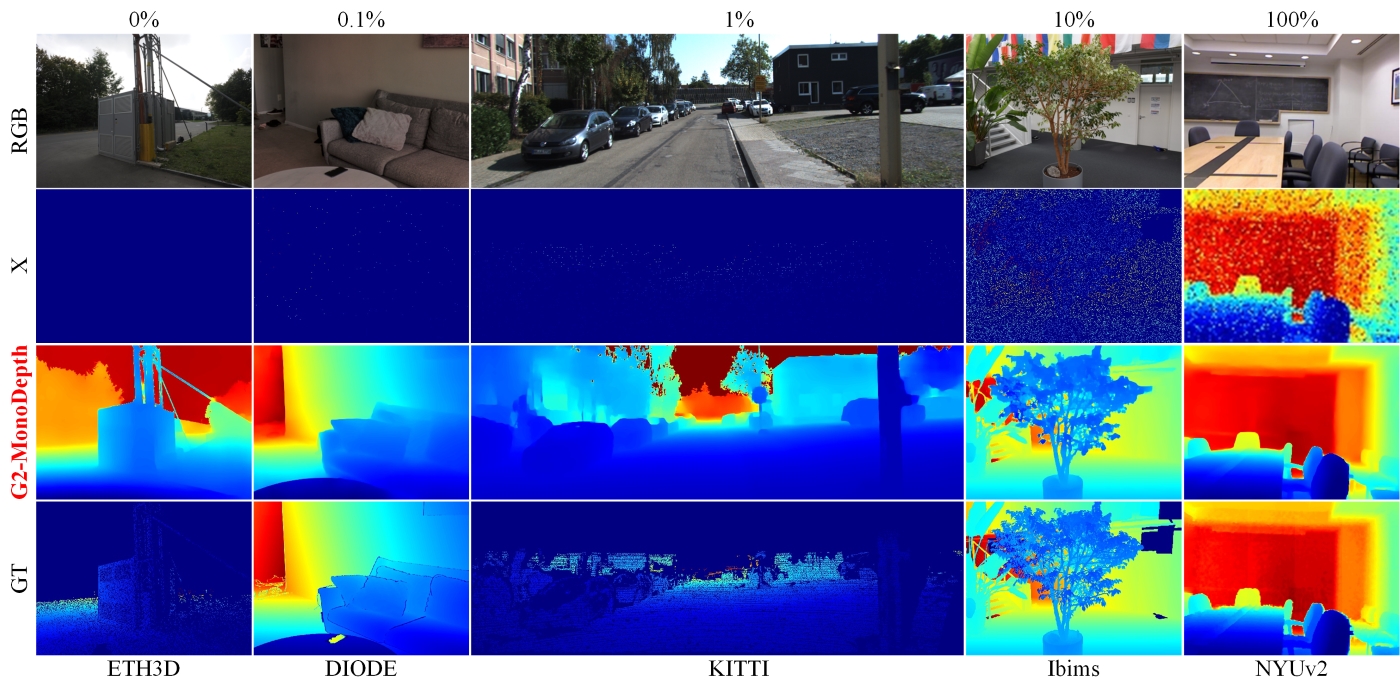

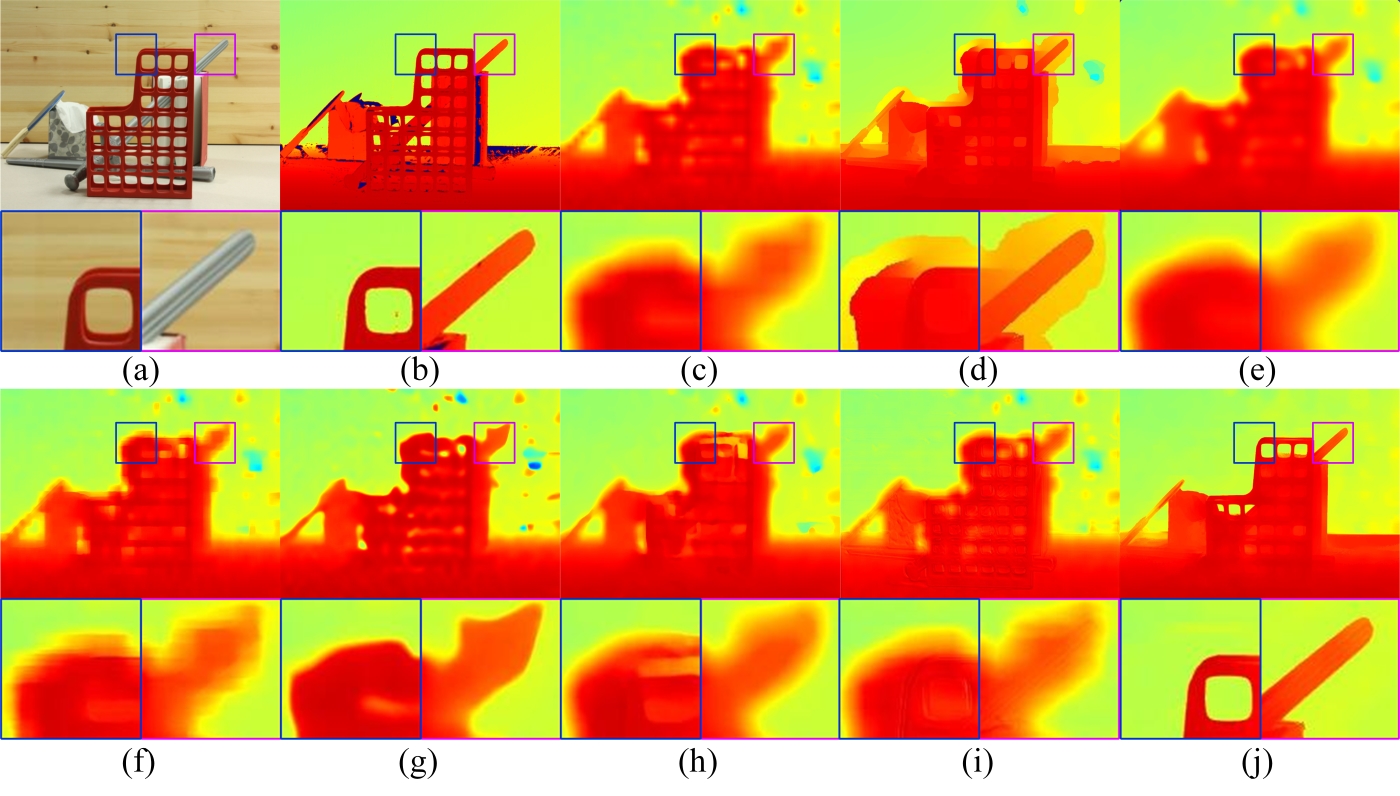

G2-MonoDepth: A General Framework of Generalized Depth Map Inference from Monocular RGB-X Data

H. Wang, M. Yang, and N. Zheng

G2-MonoDepth investigates a novel unified task of single-view depth inference for various robots, which may be equipped with a range of sensors, such as cameras, LiDAR, ToF, or their combinations, and operate in diverse indoor and outdoor environments. Our work introduces a general and generalized framework that performs robustly in this challenging scenario by comprehensively exploring data preparation, network architecture, and loss function. Project Page

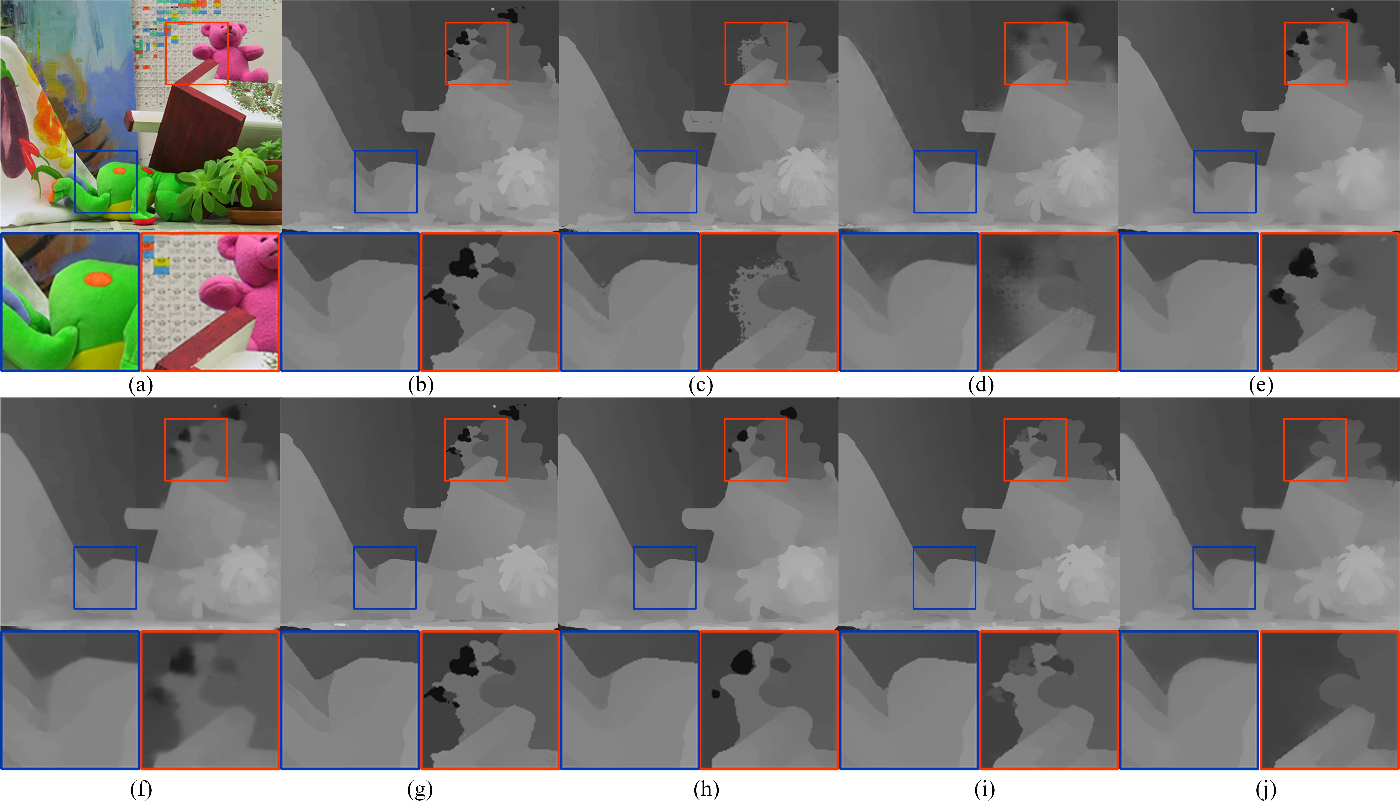

RGB-Guided Depth Map Recovery by Two-Stage Coarse-to-Fine Dense CRF Models

H. Wang, M. Yang, C. Zhu, and N. Zheng

This work aims to eliminate large erroneous regions in depth maps captured by low-cost depth sensors, without any training. It uses an optimization-based approach that employs the fully connected conditional random field (dense CRF) model to integrate local and global contexts from RGB-D pairs in a coarse-to-fine manner, achieving superior performance and even outperforming learning-based techniques. Project Page

Depth Map Recovery based on a Unified Depth Boundary Distortion Model

H. Wang, M. Yang, X. Lan, C. Zhu, and N. Zheng

This work is the first to establish a unified model capable of identifying all kinds of distorted boundaries in depth maps, including missing, fake, and misaligned boundaries. Leveraging this unified model, we developed a filter-based pipeline to iteratively optimize low-quality depth maps, resulting in high-quality depth maps with accurate boundaries. Project Page

📖 Education

- 2019.09 - 2025.06, Ph.D., College of Artificial Intelligence, Xi’an Jiaotong University (XJTU), China.

- 2023.12 - 2024.12, Visiting Ph.D., College of Computing and Data Science, Nanyang Technological University (NTU), Singapore.

- 2013.09 - 2017.06, B.S., School of Electrical and Electronic Engineering, North China Electric Power University (NCEPU), China.

🏅 Honors and Awards

- 2025.06, Outstanding Graduate, XJTU.

- 2025.01, Academic Star of the IAIR (Top 1%), XJTU.

- 2024.10, Excellent Postgraduate Student, XJTU.

- 2024.09, Baosheng Hu Scholarship (Top 5%, 1st Place), XJTU.

- 2024.01, Academic Star of the IAIR (Top 1%), XJTU.

- 2023.10, Academic Scholarship (Top 5%, 1st Place), XJTU.

- 2023.10, Qianheng Huang Scholarship, XJTU.

- 2023.07, Joint Ph.D. Scholarship (6,700 recipients in China), China Scholarship Council (CSC).

📕 Patents

- 2024, M. Yang*, H. Wang, N. Zheng. Zero-Shot Depth Completion Based on Scale Propagation Normalization Layer: Method and System. China Patent, No. 2023101807430.

- 2023, M. Yang*, H. Wang, N. Zheng. Generalizable Depth Map Inference with Single-View: Method and System. China Patent, No. 2023101807430.

- 2021, M. Yang*, H. Wang, N. Zheng. Depth Map Structure Restoration Method based on the Fully Connected Conditional Random Field Model. China Patent, No. ZL202111057715.2.

- 2020, M. Yang*, H. Wang, N. Zheng. An Iterative Method of Depth Map Structure Restoration based on Structural Similarity between RGB and Depth. China Patent, No. ZL200010007508.X.

🔨 Projects

- 2022.12 - 2025.06, A General Model of Single-View 3D Perception for Multi-Modal Autonomous Agents. Responsibility: Core Member, Source: No. 62373298, The National Natural Science Foundation of China.

- 2022.01 - 2023.12, A General Depth Perception Model. Responsibility: Project Leader, Source: No. xzy022022061, The Basic Research Foundation of XJTU.